Running Rust on Android

2021-05-05

For one of my current clients, we decided to use Rust as our main programming language. There were several reasons behind this decision; apart from the technical merits, there's also the indisputable fact that Rust is still a relatively new language, fancy and hip – and when you're a startup, using any technology that came out in the previous decade is just setting yourself up to fail. I mean, it's logical – how can you innovate without using innovative tech? The fastest way to success is aboard the hype train.

As one of the product's selling points was supposed to be "you own your data", it couldn't be a purely browser-accessible service, but rather something we'd distribute to the users to run on their own devices. We already had some headless instances running internally, and with a trivial amount of work, were able to make redistributable packages for Windows and Linux. But we knew that being desktop-only would be a serious blocker against adoption – if we wanted this to take off, we'd need mobile versions of the app. This meant we had to figure out how to get our stuff running on Android and, later, on iOS. Seeing how I already had some experience with cross-compiling and build automation, I volunteered to delve into the topic.

Getting the tools

Starting with the basics, I needed to get the Rust cross-compilers. Thankfully, Rust makes this very simple, as it's just a matter of invoking the following:

$ rustup target add armv7-linux-androideabi # For 32-bit ARM.

$ rustup target add aarch64-linux-android # For 64-bit ARM.

# x86_64 is mainly useful for running your app in the emulator.

# Speaking of hardware, there are some commercial x86-based tablets,

# and there's also hobbyists running Android-x86 on their laptops.

$ rustup target add x86_64-linux-android

(Note: Any examples further down the road will be shown for aarch64 only.)
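Each of these Rust target triples corresponds to an Android ABI name, which Android tooling uses for per-architecture directories (the arm64-v8a directory shows up later in this post). A minimal sketch of that mapping follows; the function name android_abi is made up for illustration, and only the four ABIs listed in the Android documentation are covered.

```rust
/// Maps a Rust target triple to the Android ABI name used for
/// per-architecture directories such as `lib/<abi>/` inside an APK.
/// `android_abi` is a hypothetical helper, not part of any tool.
fn android_abi(rust_target: &str) -> Option<&'static str> {
    match rust_target {
        "armv7-linux-androideabi" => Some("armeabi-v7a"),
        "aarch64-linux-android" => Some("arm64-v8a"),
        "i686-linux-android" => Some("x86"),
        "x86_64-linux-android" => Some("x86_64"),
        _ => None,
    }
}

fn main() {
    let targets = [
        "armv7-linux-androideabi",
        "aarch64-linux-android",
        "x86_64-linux-android",
    ];
    for target in targets.iter() {
        match android_abi(target) {
            Some(abi) => println!("{} -> {}", target, abi),
            None => println!("{} -> (not an Android target)", target),
        }
    }
}
```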

I expected that I would also need Android build tools. Having done a little bit of research,

I went over to the Android Studio downloads page and grabbed

the command-line tools archive. Despite its 80+ MiB size, the SDK package contains only the minimum set of required tools,

so I followed the internet's advice and used the sdkmanager to install recommended extra stuff.

$ cd ~/android/sdk/cmdline-tools/bin/

$ ./sdkmanager --sdk_root="${HOME}/android/sdk" --install 'build-tools;29.0.2'

$ ./sdkmanager --sdk_root="${HOME}/android/sdk" --install 'cmdline-tools;latest'

$ ./sdkmanager --sdk_root="${HOME}/android/sdk" --install 'platform-tools'

$ ./sdkmanager --sdk_root="${HOME}/android/sdk" --install 'platforms;android-29'

While Android supports running native code, most apps are written in either Java or Kotlin, and the SDK reflects that. In order to work with native code, I needed one more thing – the Native Development Kit. The NDK downloads page offers several versions to choose from – after a little bit of consideration, I decided to go with the LTS version, r21e.

Easy enough! ...or not.

Having taken care of the tools, I decided to just go ahead and try to compile the project.

$ cargo build --target=aarch64-linux-android

As somewhat expected, the build failed, with an error message spanning the entire screen. After sifting through it, it turned out that there was a linking problem:

error: linking with `cc` failed: exit code: 1

/usr/bin/ld: startup.48656c6c6f20546865726521.o: Relocations in generic ELF (EM: 183)

/usr/bin/ld: startup.48656c6c6f20546865726521.o: error adding symbols: file in wrong format

collect2: error: ld returned 1 exit status

Easy enough, I thought – Cargo was trying to use the system linker, instead of the Android NDK linker.

I could use the CC and LD environment variables to point Cargo in the right direction.

$ export ANDROID_NDK_ROOT="${HOME}/android/ndk"

$ export TOOLCHAIN="${ANDROID_NDK_ROOT}/toolchains/llvm/prebuilt/linux-x86_64"

$ export CC="${TOOLCHAIN}/bin/aarch64-linux-android29-clang"

$ export LD="${TOOLCHAIN}/bin/aarch64-linux-android-ld"

$ cargo build --target=aarch64-linux-android

To my disappointment, this didn't change anything. Not willing to spend the rest of my day wrestling with Cargo, I decided to look around to see if someone had, perhaps, made a ready-made solution – and quickly found something that looked like the perfect tool for the job.
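A likely explanation for the variables having no effect: according to Cargo's configuration documentation, the linker for a target is chosen through the per-target linker setting or the matching CARGO_TARGET_<TRIPLE>_LINKER environment variable, while CC and LD are conventions used by build scripts that compile C code. The sketch below only shows how that variable name is derived from a target triple; the helper name linker_env_var is made up for illustration.

```rust
/// Builds the name of the environment variable Cargo consults for the
/// linker of a given target: the triple is upper-cased and every `-`
/// or `.` becomes `_`. `linker_env_var` is a hypothetical helper.
fn linker_env_var(target_triple: &str) -> String {
    let triple: String = target_triple
        .chars()
        .map(|c| match c {
            '-' | '.' => '_',
            other => other.to_ascii_uppercase(),
        })
        .collect();
    format!("CARGO_TARGET_{}_LINKER", triple)
}

fn main() {
    // The value would be the NDK clang wrapper already used in this post.
    let name = linker_env_var("aarch64-linux-android");
    println!(
        "export {}=\"${{TOOLCHAIN}}/bin/aarch64-linux-android29-clang\"",
        name
    );
}
```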

Enter cargo-apk

cargo-apk is a tool that lets you easily build an .apk from your Cargo project.

All you need to do is install the tool, add some configuration to your Cargo.toml file, and you're ready to go.

# cargo-apk compiles your code to an .so file,

# which is then loaded by the Android runtime

[lib]

path = "src/main.rs"

crate-type = ["cdylib"]

# Android-specific configuration follows.

[package.metadata.android]

# Name of your APK as shown in the app drawer and in the app switcher

apk_label = "Hip Startup"

# The target Android API level.

target_sdk_version = 29

min_sdk_version = 26

# See: https://developer.android.com/guide/topics/manifest/activity-element#screen

orientation = "portrait"

Having added the configuration above, I tried building the project with cargo-apk.

$ cargo install cargo-apk

$ export ANDROID_SDK_ROOT="${HOME}/android/sdk"

$ export ANDROID_NDK_ROOT="${HOME}/android/ndk"

$ cargo apk build --target aarch64-linux-android

To my great surprise, it worked! Well, almost. Once again, it was a linking error. However, this time it wasn't a cryptic error regarding relocations and file formats, but rather a simple case of a missing library:

error: linking with `aarch64-linux-android29-clang` failed: exit code: 1

aarch64-linux-android/bin/ld: cannot find -lsqlite3

clang: error: linker command failed with exit code 1 (use -v to see invocation)

Dependencies, dependencies

Of course. Our project used SQLite, which is a C library. While the Rust community is somewhat infamous for touting "Rewrite it in Rust" on every possible occasion, crates for working with popular libraries do not, in fact, reimplement them in Rust, as that would require a colossal amount of effort. Instead, they just offer a way to call the libraries from Rust code, either as a simple re-export of the C functions, or by offering a more friendly API and abstracting the FFI calls a bit. The rusqlite crate we used was no different, meaning that we would need to build SQLite as well.

SQLite uses the GNU Autotools for building. After fumbling a bit with environment variables and options for configure,

I looked a bit through the NDK documentation – and what do you know, I found a page describing how to use the NDK with various build systems,

including the Autotools. Alas, Google being Google, a.k.a.

"the company with the attention span of a goldfish", despite offering an LTS version of the NDK, provides documentation only for the latest

version – and to my peril, things changed between the r21 LTS and the latest r22. Thankfully, the Wayback Machine had a

historical version of the page,

allowing me to find the instructions appropriate for NDK r21.

$ ANDROID_API=29

$ TOOLCHAIN="${ANDROID_NDK_ROOT}/toolchains/llvm/prebuilt/linux-x86_64"

$ export CC="${TOOLCHAIN}/bin/aarch64-linux-android${ANDROID_API}-clang"

$ export CXX="${TOOLCHAIN}/bin/aarch64-linux-android${ANDROID_API}-clang++"

$ export AR="${TOOLCHAIN}/bin/aarch64-linux-android-ar"

$ export AS="${TOOLCHAIN}/bin/aarch64-linux-android-as"

$ export LD="${TOOLCHAIN}/bin/aarch64-linux-android-ld"

$ export RANLIB="${TOOLCHAIN}/bin/aarch64-linux-android-ranlib"

$ export STRIP="${TOOLCHAIN}/bin/aarch64-linux-android-strip"

$ ./configure --host=aarch64-linux-android --with-pic

$ make -j $(nproc)

Pick me up, Scotty

With that, SQLite built successfully, giving me libsqlite3.so. Now it was a matter of figuring out how to make Cargo pick it up.

Looking through The Cargo Book, I came across a section on environment variables,

which mentioned RUSTFLAGS. Similar to how CFLAGS and CXXFLAGS are treated by Make or CMake, the contents of RUSTFLAGS are passed

by Cargo to the rustc compiler, allowing you to influence its behaviour.

While simple, the approach seemed rather inelegant to me, so I dug a bit further into other options. Further on in The Cargo Book, I came across the section describing project configuration, and sure enough, there is a way to specify RUSTFLAGS there. However, no matter what I tried, I kept getting warnings from Cargo, telling me about unused manifest keys.

warning: unused manifest key: target.aarch64-linux-android.rustflags

Looking about some more, I came across the section on build scripts.

They were, no doubt, a powerful tool... but I had already spent a long time fumbling around with Cargo configuration,

and didn't want to spend even more time reading about writing build scripts, so ultimately I decided to just go

with the environment variable solution, and maybe come back to build scripts some time later (that is, never).
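For reference, the build-script route skipped here tends to be short. A minimal sketch, assuming a hypothetical SQLITE_LIB_DIR environment variable that points at the directory holding libsqlite3.so; the cargo: lines are standard Cargo build-script instructions, while the variable name, the helper function and the fallback path are made up for illustration.

```rust
// build.rs (sketch)
use std::env;

/// Returns the instructions a build script prints to tell Cargo where
/// to look for a native library and which library to link against.
fn link_directives(lib_dir: &str, lib_name: &str) -> Vec<String> {
    vec![
        format!("cargo:rustc-link-search=native={}", lib_dir),
        format!("cargo:rustc-link-lib=dylib={}", lib_name),
    ]
}

fn main() {
    // SQLITE_LIB_DIR is an assumed variable name, not a Cargo convention.
    // An absolute path is the safer choice in a real project.
    let lib_dir = env::var("SQLITE_LIB_DIR")
        .unwrap_or_else(|_| "sqlite-autoconf-3340000/.libs".to_string());

    // Ask Cargo to re-run this script whenever the variable changes.
    println!("cargo:rerun-if-env-changed=SQLITE_LIB_DIR");
    for line in link_directives(&lib_dir, "sqlite3") {
        println!("{}", line);
    }
}
```

Placed next to Cargo.toml as build.rs, a script like this would take over the job of the -L flag in RUSTFLAGS.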

I typed in the command and anxiously watched it execute.

$ RUSTFLAGS="-L $(pwd)/sqlite-autoconf-3340000/.libs/" cargo apk build --target aarch64-linux-android

Once again, this... kind of worked. While the linker no longer complained about a missing library,

cargo-apk was unable to find it and include it in the resultant APK.

'lib/arm64-v8a/libstartup.so'...

Shared library "libsqlite3.so" not found.

Verifying alignment of target/debug/apk/startup.apk (4)...

49 AndroidManifest.xml (OK - compressed)

997 lib/arm64-v8a/libstartup.so (OK - compressed)

Verification succesful

I took a step back and took a closer look at the error messages produced by the linker, back when I didn't have libsqlite3.so compiled yet.

The linker was combining a lot of object files, all of them located in target/aarch64-linux-android/debug/deps/.

What if I just smuggled the .so file inside there?

$ cp sqlite-autoconf-3340000/.libs/libsqlite3.so target/aarch64-linux-android/debug/deps

$ cargo apk build --target aarch64-linux-android

To my surprise, this worked!

'lib/arm64-v8a/libstartup.so'...

'lib/arm64-v8a/libsqlite3.so'...

Verifying alignment of target/debug/apk/startup.apk (4)...

49 AndroidManifest.xml (OK - compressed)

997 lib/arm64-v8a/libstartup.so (OK - compressed)

15881608 lib/arm64-v8a/libsqlite3.so (OK - compressed)

Verification succesful

I now had an .apk that I could install on an Android phone. Great success!

Of Applications and Activities, or how I painted myself into a corner

With the Rust code compiled to an .apk, all that was left to do was figuring out how to merge that with our Java code, which provided the UI.

In my naïveté, I typed "how to combine APK" in DuckDuckGo. Reading through the top results, it quickly became apparent that

this isn't really possible, at least not in a clean & easy way and without deep knowledge of how Android apps work.

Not all was lost, however, as the articles proposed an alternative approach – combining

the Activities into one application.

If, like me, you've never worked with Android before, then the tl;dr version of "what's an Activity" is basically: it's what you'd call a "screen" or a "view" when designing your app. For example, consider a shopping app:

- The login screen may be one activity.

- The product search screen may be another activity.

- The details page for a chosen product can be another activity.

- The shopping cart can be yet another activity.

- The checkout page, yet another one.

Each of these may contain some interactive elements, like the ubiquitous hamburger menu. If you were so inclined, you could, in theory, put your entire app in a single activity, but this would be rather difficult to work with. Of course, there's still a lot that could be said about activities, but it's not really relevant right now.

Now, back to our adventures in Rust. While the solution to my problem called for combining Activities into one application,

I wasn't really sure how my Rust .apk ties into all of this. After digging a bit through

cargo-apk code,

I realised that what it does is, essentially, wrap my code in some magic glue code, and create

a NativeActivity for Android to run.

To combine the Activities into one app, I needed to tinker with the application's AndroidManifest.xml,

adding an appropriate <activity> node to the document.

But how do I know the properties of the NativeActivity generated by cargo-apk? Luckily for me, when cargo-apk does

its job, it generates a minimal AndroidManifest.xml file and places it right beside the resultant .apk.

A brief look revealed that my NativeActivity's declaration looked like this:

<activity

android:name="android.app.NativeActivity"

android:label="startup"

android:screenOrientation="portrait"

android:launchMode="standard"

android:configChanges="orientation|keyboardHidden|screenSize">

<meta-data android:name="android.app.lib_name" android:value="startup" />

<intent-filter>

<action android:name="android.intent.action.MAIN" />

<category android:name="android.intent.category.LAUNCHER" />

</intent-filter>

</activity>

All I had to do was take the snippet above and copy-paste it into the manifest of the Java app.

Of course, what this did was merely add a statement to the app's manifest,

saying that the app is going to contain such-and-such activity. The build process for the Java app

was not going to figure out the location of my libstartup.so file and include it automagically.

Fortunately, it turned out that all I needed to do was just

copy the library files to a specific directory,

where Gradle (the build system used for Android apps) would pick them up with no questions asked.

$ mkdir -p android/app/src/main/jniLibs/arm64-v8a

$ cp sqlite-autoconf-3340000/.libs/libsqlite3.so android/app/src/main/jniLibs/arm64-v8a/

$ cp target/aarch64-linux-android/debug/libstartup.so android/app/src/main/jniLibs/arm64-v8a/

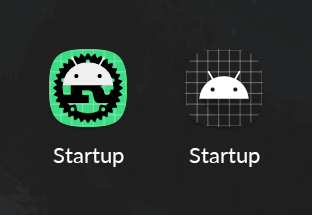

$ cd android/ && ./gradlew && ./gradlew build

Having done all that, I launched the build – and lo and behold, it worked! I installed the .apk on an Android device I had lying around and... uh-oh.

My application, once installed, produced not one, but two entries in the app launcher. One of them launched the Java UI, while the other launched the NativeActivity containing the Rust code. After reading a bit more about Activities and the AndroidManifest, I learned that the part responsible for this was the <intent-filter> of the NativeActivity – namely the <category> node, declaring that it should be displayed in the launcher. Once I removed it, things went back to the way they were supposed to be, the NativeActivity no longer showing up in the launcher.

There was still, however, one problem: how do I make the Java activities ask the Rust activities to do work for them?

Malicious intents

Activities in Android can, without any problems, launch each other – if that wasn't possible, you couldn't really transfer the user between them. The standard way of calling another Activity is through the startActivity() method, which takes a single argument: an instance of the Intent class.

While the name of the Intent class is self-explanatory, its usage may be a bit unintuitive at first. In its most basic form, it simply holds a reference to the calling Activity instance, and a class handle for the Activity we want to invoke. (To be precise, an Intent requires a calling Context. An Activity is just one type of a Context.)

But an Intent can also be used to convey information describing why one Activity is calling another – such as the

action, which can be used

to differentiate between e.g. "show something" and "edit something";

or the URI of the data to be operated upon,

or its MIME type.

Apart from these precise-purpose get/set method pairs, an Intent can also hold pretty much any amount of "extra" data,

which operates basically as a key-value store (or a dictionary, if you prefer to call it so).

As such, Intents provide a nice, standardized way of passing information between Activities. The caller provides the callee with all the information required for processing its request, and it can receive another Intent in return, holding the requested information. This is all fine and dandy when writing code in Java, but what about my Rust code that gets put into a NativeActivity?

If you look at the inheritance tree, you can see that NativeActivity is a descendant of Activity – which means it has

access to all the (non-private) methods. This meant that I could call getIntent() and retrieve the data from the caller...

except that, since that's a Java method, and I'm operating in native code,

I'd need to use JNI – the Java Native Interface – to perform the function call.

Unfortunately, NativeActivities do not have any alternative mechanisms for passing information or working with Intents.

All of this meant that, much to my dismay, I'd have to look into working with JNI.

Journey into JNI land

At this point, I was quite frustrated with having spent so much time without achieving much in terms of tangible results. On the other hand, I realised that using JNI opened some new possibilities – instead of having to work with Activities and Intents, I could just stick my code in a function and communicate via call arguments and return values. With this new insight, I began my research into working with JNI.

Since, in Java, (almost) everything is an object, and code cannot exist outside of a class – my native code would also have to be part of a class. As I didn't need persistence, it made sense to simply use a static method.

package com.startup.hip;

public class RustCode {

public static native void doStuff();

}

Above is a minimal example of a Java class with one static method marked native.

With that in place, I needed to implement the corresponding function. But how do I know the appropriate function signature?

Luckily for me, Java has a feature for generating C header files for JNI purposes.

Until Java SE 9, it was its own separate tool, javah;

later on, it got merged into the main javac compiler executable as the -h option. The option takes one argument,

that being the directory where to put generated .h files. As such, the usage is fairly simple.

$ javac -h ./ RustCode.java

Invoking the above command will create a com_startup_hip_RustCode.h file,

containing the function declaration (along with include guards).

#include <jni.h>

JNIEXPORT void JNICALL Java_com_startup_hip_RustCode_doStuff(JNIEnv *, jclass);

With this knowledge, I could go ahead and create the appropriate function in Rust.
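The name in the generated header follows a fixed scheme from the JNI specification: the prefix Java_, the fully-qualified class name, an underscore, and the method name, with a handful of characters escaped. A rough sketch of that scheme follows; it ignores overloaded native methods (which get an extra argument-signature suffix), and the names mangle and jni_symbol are made up here.

```rust
/// Escapes one name component the way the JNI specification describes:
/// `.` or `/` -> `_`, `_` -> `_1`, `;` -> `_2`, `[` -> `_3`, and any other
/// non-alphanumeric character -> `_0xxxx` (lower-case hex, per UTF-16 unit).
fn mangle(part: &str) -> String {
    let mut out = String::new();
    for c in part.chars() {
        match c {
            '.' | '/' => out.push('_'),
            '_' => out.push_str("_1"),
            ';' => out.push_str("_2"),
            '[' => out.push_str("_3"),
            c if c.is_ascii_alphanumeric() => out.push(c),
            c => {
                let mut units = [0u16; 2];
                for unit in c.encode_utf16(&mut units).iter() {
                    out.push_str(&format!("_0{:04x}", unit));
                }
            }
        }
    }
    out
}

/// Builds the symbol name the JVM looks up for a non-overloaded
/// native method. Hypothetical helper, for illustration only.
fn jni_symbol(class: &str, method: &str) -> String {
    format!("Java_{}_{}", mangle(class), mangle(method))
}

fn main() {
    println!("{}", jni_symbol("com.startup.hip.RustCode", "doStuff"));
}
```

This is why a class or method name containing an underscore ends up with _1 in the exported Rust function name.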

Flashbacks from C++

When dealing with external code, Rust is very similar to C, as the primary way of doing this is by using extern blocks. Also, similarly to C++, Rust utilises name mangling – not surprising, given the language's great support for generics and powerful macros. Fortunately, Rust provides an easy way to disable name mangling for an item, using the #[no_mangle] attribute.

use jni::{objects::JClass, JNIEnv};

#[no_mangle]

pub extern "C" fn Java_com_startup_hip_RustCode_doStuff(

_env: JNIEnv,

_class: JClass,

) {}

Having created the function stub, I could go on to writing the actual implementation.

Taking in arguments

As often happens, my native function needed to take in some arguments. In this case, it was a string containing a code which would then be passed to the server.

package com.startup.hip;

public class RustCode {

public static native void doStuff(String code);

}

After changing the Java code, I regenerated the C header file and edited the Rust code accordingly.

use jni::{objects::{JClass, JString}, JNIEnv};

#[no_mangle]

pub extern "C" fn Java_com_startup_hip_RustCode_doStuff(

_env: JNIEnv,

_class: JClass,

code: JString,

) {}

Simple enough. I now needed to extract the text from the Java String and pass it further down to the Rust code... which proved a bit more complicated than I anticipated. The problem is that JNI exposes strings to native code in a modified version of UTF-8, whereas Rust strings must be valid UTF-8. While Rust has a type for dealing with arbitrary strings, our code exclusively used "classic" string types, and changing it all would require quite a bit of work.

Fortunately, the jni crate comes with a built-in mechanism for converting between standard UTF-8 and JVM's modified UTF-8,

through the special JNIStr type.

After digging a bit through the documentation, I came up with the following code:

// Convert from JString – a thinly wrapped JObject – to a JavaStr

let code_jvm = env.get_string(code).unwrap();

// Create a String from JavaStr, causing text conversion

let code_rust = String::from(code_jvm);

I now had a Rust String that I could pass on to the rest of our Rust code. Great success!
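For the curious, modified UTF-8 differs from standard UTF-8 in two ways: the NUL character is encoded as the two bytes 0xC0 0x80, and characters outside the Basic Multilingual Plane are written as a UTF-16 surrogate pair, with each half encoded as three bytes. The sketch below is a hand-rolled illustration of the encoding direction only (to_modified_utf8 is a name made up here); in real code, the jni crate's conversion takes care of this.

```rust
/// Encodes a Rust string as JVM-style modified UTF-8.
/// Illustration only; not taken from the jni crate.
fn to_modified_utf8(s: &str) -> Vec<u8> {
    let mut out = Vec::with_capacity(s.len());
    for c in s.chars() {
        match c as u32 {
            // NUL never appears as a single zero byte.
            0 => out.extend_from_slice(&[0xC0, 0x80]),
            // Supplementary characters: encode each UTF-16 surrogate
            // as if it were a separate three-byte character.
            0x1_0000..=0x10_FFFF => {
                let mut units = [0u16; 2];
                for &unit in c.encode_utf16(&mut units).iter() {
                    let u = unit as u32;
                    out.push(0xE0 | (u >> 12) as u8);
                    out.push(0x80 | ((u >> 6) & 0x3F) as u8);
                    out.push(0x80 | (u & 0x3F) as u8);
                }
            }
            // Everything else is identical to standard UTF-8.
            _ => {
                let mut buf = [0u8; 4];
                out.extend_from_slice(c.encode_utf8(&mut buf).as_bytes());
            }
        }
    }
    out
}

fn main() {
    println!("{:02X?}", to_modified_utf8("a\0b"));
    println!("{:02X?}", to_modified_utf8("\u{1F600}"));
}
```

For plain text without NULs or emoji the two encodings are byte-for-byte identical, which is why the difference is easy to miss in testing.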

Returning values

Taking in arguments was half the story, but I also needed to return a value. Coincidentally, it would also be a string – one representing the value returned by the server.

package com.startup.hip;

public class RustCode {

public static native String doStuff(String code);

}

I changed the Java code once again, regenerated the C header file and edited the Rust code accordingly.

use jni::{objects::{JClass, JString}, JNIEnv};

#[no_mangle]

pub extern "C" fn Java_com_startup_hip_RustCode_doStuff<'a>(

env: JNIEnv<'a>,

_class: JClass,

code: JString,

) -> JString<'a>

{

// function body here

}

As you can see, the return value in JNI is still handled as, well, a return value.

All that was left to do now was to create the JString holding the result.

Similarly to get_string(), the JNIEnv struct also contains a new_string() function,

which does exactly what the name suggests.

// Copy-pasted from earlier snippet

let code_rust = String::from(env.get_string(code).unwrap());

let result = match some_rust_function(code_rust) {

Ok(value) => format!("OK {}", value),

Err(e) => format!("ER {:?}", e),

};

return env.new_string(result).unwrap();

And just like this, my JNI wrapper was complete. I was now able to call a Rust function from Java code, passing a value to the call and receiving a value in return.

Error handling, the Rust way

While the code did what it was supposed to, I didn't quite like the number of .unwrap() calls present.

After all, error handling is a big part of Rust, and just because I'm doing language interop,

doesn't mean that I'm allowed to ignore the matter. Quite the contrary – I'd argue that the contact surface

of the two languages should be as foolproof as possible, as to prevent situations where some obscure bug

ultimately turns out to have arisen from bad interop. Also, having to inspect the return value on the Java side

to determine whether the call was successful or not made the whole thing a bit clunky to use.

Instead of reinventing the wheel, I thought a bit on how to best translate Rust's

Result<A, B> approach to the Java side.

Luckily for me, return values from my Rust functions were all strings. As for errors,

most of them were either non-recoverable, or caused by bad inputs – which meant that I could forego

having precise error codes and just rely on nicely formatted error messages... which again, means strings.

Thus, Result<A, B> became Result<String, String>.

Defining the Java class

While Java has support for generics (though they're a bit of a cheat),

I didn't feel like digging into the specifics of using them from JNI, so limiting myself to a single

interface made things simpler. I decided to create a Java class that roughly represents the Result<String, String> semantics.

public class Result {

private boolean ok;

private String value;

public Result(boolean is_ok, String value) {

this.ok = is_ok;

this.value = value;

}

public boolean isOk() {

return this.ok;

}

public boolean isError() {

return !this.ok;

}

public String getValue() {

return this.ok ? this.value : null;

}

public String getError() {

return this.ok ? null : this.value;

}

}

While this got the job done, compared to its Rust counterpart, it had some drawbacks – the most egregious one

being returning null when one accesses the wrong variant of the result. Since null is a perfectly fine

value for Java strings, calling getValue() without paying attention and passing it somewhere further

could result in a NullPointerException

popping up in a seemingly unrelated piece of code. While this could be easily improved

by throwing an exception, I decided to leave that as something to be added later (that is, never).

Returning an object from JNI

The only thing that was left now was actually returning an instance of my Result class from the Rust function. After searching for a bit, I came across the appropriately-named NewObject() JNI function. This function takes four arguments:

- a handle for the JNI environment

- a handle for the class we want to create

- a constructor signature

- arguments to the constructor

The Rust function receives the JNI environment handle as one of its arguments, so that was already taken care of. The constructor arguments can be passed as an array, which should be simple enough. I needed to figure out the other two function arguments.

To retrieve a handle for the class, JNI offers the

FindClass() function.

It takes two arguments: the environment handle, and the fully-qualified name of the class – that being,

quite simply, the "import name" of the class, except with periods replaced by slashes.

For example, java.lang.String becomes java/lang/String. In my case, this meant that

com.startup.hip.Result became com/startup/hip/Result.

The constructor signature is a string describing, well, the constructor signature – how many arguments it takes and of which types. At first glance, this seemed a little confusing – but then I remembered that Java supports function overloading, and constructors are also included. Since a class may have multiple constructors, I had to let JNI know which constructor I wanted to use. After searching for a bit on the internet, the easiest solution for learning function signatures that I've found called for compiling the Java class, and then using the Java Disassembler, javap.

$ javac android/app/src/main/java/com/startup/hip/Result.java

$ javap -s android/app/src/main/java/com/startup/hip/Result.class

Compiled from "Result.java"

public class com.startup.hip.Result {

public com.startup.hip.Result(boolean, java.lang.String);

descriptor: (ZLjava/lang/String;)V

public boolean isOk();

descriptor: ()Z

public boolean isError();

descriptor: ()Z

public java.lang.String getValue();

descriptor: ()Ljava/lang/String;

public java.lang.String getError();

descriptor: ()Ljava/lang/String;

}

Having run the commands above, I now knew the signature I wanted to use: (ZLjava/lang/String;)V.
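The descriptors printed by javap follow a small grammar: one letter per primitive type, L followed by the slash-separated class name and a semicolon for object types, a leading [ per array dimension, and the parameter list in parentheses followed by the return type. A sketch of that grammar follows, with helper names invented for illustration and nested classes (which use $ in their binary names) left out.

```rust
/// Turns a Java type name such as `boolean`, `java.lang.String`
/// or `int[][]` into its JNI type descriptor.
fn type_descriptor(java_type: &str) -> String {
    // Peel off array suffixes, counting the dimensions.
    let mut base = java_type.trim();
    let mut dims = 0;
    while let Some(stripped) = base.strip_suffix("[]") {
        base = stripped.trim_end();
        dims += 1;
    }
    let element = match base {
        "boolean" => "Z".to_string(),
        "byte" => "B".to_string(),
        "char" => "C".to_string(),
        "short" => "S".to_string(),
        "int" => "I".to_string(),
        "long" => "J".to_string(),
        "float" => "F".to_string(),
        "double" => "D".to_string(),
        "void" => "V".to_string(),
        // Anything else is treated as a fully-qualified class name.
        class => format!("L{};", class.replace('.', "/")),
    };
    format!("{}{}", "[".repeat(dims), element)
}

/// Builds a method (or constructor) descriptor; constructors
/// use `void` as their return type.
fn method_descriptor(params: &[&str], ret: &str) -> String {
    let args: String = params.iter().map(|p| type_descriptor(p)).collect();
    format!("({}){}", args, type_descriptor(ret))
}

fn main() {
    println!("{}", method_descriptor(&["boolean", "java.lang.String"], "void"));
}
```

Running javap -s remains the more reliable option; the sketch is only meant to make the output above easier to read.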

With all the pieces in place, it was time to create the array holding the constructor arguments and call NewObject().

use jni::{objects::{JObject, JValue}, JNIEnv};

fn create_java_result<'e>(

env: &JNIEnv<'e>,

is_ok: bool,

value: &str,

) -> JObject<'e>

{

let class = env

.find_class("com/startup/hip/Result")

.unwrap();

let args: [JValue<'e>; 2] = [

JValue::Bool(u8::from(is_ok)),

JValue::Object(JObject::from(env.new_string(value).unwrap())),

];

env.new_object(class, "(ZLjava/lang/String;)V", &args)

.unwrap()

}

Et voilà! I could now return instances of my custom Result Java class from native functions.

Using a more generic solution

While the code above serves its purpose well, it has one drawback: it explicitly takes a boolean and a string,

requiring the caller to handle the Result themselves and call the function with the appropriate arguments.

Writing the "return early on error" logic is tedious, but thankfully, Rust has a solution for that –

the question mark operator.

However, our code called functions from different libraries, which, in turn, used different error types –

which meant we couldn't use Result<OurType, OurError> and would have to do something akin to

Result<OurType, std::error::Error> – and this is not possible, as Rust doesn't allow bare traits, which have no known size, to be used

as function return types.

The standard solution to this problem is to use Box<dyn Trait>,

but to make things easier, I decided to use the anyhow crate,

which allowed me to mix and match errors as I pleased.
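To illustrate the Box<dyn Trait> route using only the standard library: the ? operator converts any error type implementing std::error::Error into a Box<dyn Error>, so one function can mix different error types. The function parse_port and its inputs are made-up examples, not code from the project.

```rust
use std::error::Error;

/// Parses a port number from raw bytes. Two unrelated error types
/// (Utf8Error and ParseIntError) flow through the same return type.
fn parse_port(raw: &[u8]) -> Result<u16, Box<dyn Error>> {
    let text = std::str::from_utf8(raw)?; // may fail with Utf8Error
    let port = text.trim().parse::<u16>()?; // may fail with ParseIntError
    Ok(port)
}

fn main() {
    let inputs: [&[u8]; 3] = [b" 8080 ", b"port", b"\xff"];
    for raw in inputs.iter() {
        match parse_port(raw) {
            Ok(port) => println!("OK {}", port),
            Err(e) => println!("ER {}", e),
        }
    }
}
```

The anyhow crate offers the same kind of convenience, with extras such as attaching context to errors.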

With anyhow, I could write my code like this:

use std::fmt::Display;

use jni::{
    objects::{JClass, JObject, JString},
    JNIEnv,
};

fn rust_result_to_java_result<'e, T>(
    env: &JNIEnv<'e>,
    result: anyhow::Result<T>,
) -> JObject<'e>
where
    T: Display,
{
    let (is_ok, value) = match result {
        Ok(v) => (true, format!("{}", v)),
        Err(e) => (false, format!("{:?}", e)),
    };
    // create_java_result() takes a &str, so borrow the formatted String.
    create_java_result(env, is_ok, &value)
}

// Borrows the environment, so the caller can keep using it afterwards.
fn actually_do_stuff<'a>(
    env: &JNIEnv<'a>,
    code: JString,
) -> anyhow::Result<String>
{
    let code = String::from(env.get_string(code)?);
    let intermediate_value = some_rust_function(code)?;
    other_rust_function(intermediate_value)
}

#[no_mangle]
pub extern "C" fn Java_com_startup_hip_RustCode_doStuff<'a>(
    env: JNIEnv<'a>,
    _class: JClass,
    code: JString,
) -> JObject<'a>
{
    let result = actually_do_stuff(&env, code);
    rust_result_to_java_result(&env, result)
}

Much easier! I could now return any kind of Result I wanted,

and have it converted to an instance of the Java class to be consumed by the Java code.

Wrapping it up

Getting our Rust code running on Android wasn't an easy task, but I'm rather satisfied with the solution

I eventually arrived at. We'd write our code in bog-standard Rust and compile it to a shared library,

which would then be loaded by the JVM at runtime.

While the JNI seemed a bit intimidating at first, using this standardised solution

meant that neither the Java code, nor the Gradle build system cared one bit about the fact that our native

code was written in Rust. Cross-compiling with Cargo was still a bit tricky,

as it turned out that cargo-apk set

a lot of environment variables

to make the whole thing work.

There was also the issue of the external libraries our code depended on –

but all of this could be solved with just a bunch of shell scripts.

If you want to try this out for yourselves, I have prepared a public GitHub repository containing a minimal Android app with parts written in Rust, with an additional dependency on an external C library. The licence on that little thing is zlib, so feel free to grab the code and use it for your purposes.

Addendum: Using a Gradle plugin

One of my readers pointed out that there is actually a Rust plugin for Gradle, which trivializes cross-compilation and allows for better handling of dependencies. They even created a GitHub repository showing how to set up the build process using said plugin. Unsurprisingly, it contains a lot less flaky plumbing code.

References

- Android NDK documentation: other build systems: Autoconf

- crates.io: cargo-apk

- cargo-apk: ndk-glue/src/lib.rs

- cargo-apk: ndk-build/src/cargo.rs

- Android developer documentation: app manifest: <activity>

- Android developer documentation: Activity

- Android developer documentation: NativeActivity

- Android developer documentation: Intent

- crates.io: jni

- Java SE 11: JNI specification

- Java SE 9: tools: javah

- The Rust Programming Language: Calling Rust Functions from Other Languages

- Java SE 11: tools: javap

- Thorn Technologies: Using JNI to call C functions from Android Java

- Code Ranch: How to create new objects with JNI

- Stack Overflow: Java signature for method
